The first part of the article is available here.

After the global shift to distance learning in 2020, international studies quickly documented a noticeable decline in academic performance, particularly in mathematics (Goldhaber et al., 2022; Lichand et al., 2022). One of the key factors shaping the extent of these losses was the length of time students spent learning online. The relationship proved to be nonlinear: short-term school closures (up to eight weeks), as seen in well-prepared systems such as Switzerland, Denmark, and Australia, had virtually no effect on outcomes (Tomasik et al., 2020; Birkelund & Karlson, 2021; Gore et al., 2021). Yet once remote learning extended to about half a year, educational losses began to increase sharply (Schult et al., 2022; Goldhaber et al., 2022, 2023). Some researchers, including Engzell et al. (2021), argue that these losses may have been temporary and concentrated mainly at the beginning of the pandemic, when schools and teachers had not yet fully adapted to the new format.

Educational losses have also been highly uneven. They differ not only across countries but even among individual schools within the same system, depending on their readiness for online learning and the quality of digital infrastructure. The losses were particularly severe among the most vulnerable groups: students from low socio-economic backgrounds, members of ethnic minorities, and those who had already shown lower academic performance before the pandemic (Engzell et al., 2020; Goldhaber et al., 2022, 2023; Singh et al., 2022; Maldonado & Witte, 2021; Kennedy & Strietholt, 2023; Jakubowski et al., 2024).

In most systems, achievement gaps tied to social and ethnic factors have widened. Crucially, these disparities did not automatically disappear after the return to in-person learning, particularly for vulnerable students (Lichand & Doria, 2024; Peters et al., 2024). Noticeable recovery occurred only where intensive programs were introduced, such as structured tutoring in India or targeted support measures in Australia (Gore et al., 2021; Miller et al., 2023; Singh et al., 2022). In some cases, compensating for a single year of remote learning required as much as two additional years of effort (Lichand & Doria, 2024; Singh et al., 2022). McKinsey (2023) estimates that if recovery depends solely on the ordinary pace of learning, without extra interventions, returning to pre-COVID performance levels could take decades.

Similar patterns have been observed in Ukraine. According to the Ukrainian Center for Educational Quality Assessment’s report on the results of the Nationwide External Monitoring of the Quality of Primary Education, primary school graduates who studied remotely for less than one month in 2020/2021 achieved an average score of 200.4 in mathematics and 201.4 in reading (on a 100–300 scale). Among those who studied online for one to two months, the scores were lower (199.5 in mathematics and 198.4 in reading), and among students who studied remotely for three to four months they were lower still, at 192.8 and 194.5 respectively. This amounts to a drop of 7.6 points in mathematics and 6.9 points in reading within just a few months of remote learning. Outcomes decline as the duration of remote learning increases; in mathematics, most of the decline occurs in the three-to-four-month group. At the same time, the authors of the study stress that not only the length of remote instruction but also its organization is critical: regular scheduling and attendance of online lessons, teacher explanations, and access to adequate technical resources.
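The size of each step between the three duration groups can be computed directly from the averages reported above. The sketch below uses only those published group means; the names `means` and `step_drops` are illustrative. Note that the duration groups are not equally wide in months, so the step sizes are not per-month rates.

```python
# Group means from the primary-education monitoring (100-300 scale),
# ordered by duration of remote learning in 2020/2021.
groups = ["<1 month", "1-2 months", "3-4 months"]
means = {
    "mathematics": [200.4, 199.5, 192.8],
    "reading": [201.4, 198.4, 194.5],
}

def step_drops(values):
    """Decline from each duration group to the next (positive = lower score)."""
    return [round(prev - nxt, 1) for prev, nxt in zip(values, values[1:])]

for subject, values in means.items():
    total = round(values[0] - values[-1], 1)
    print(f"{subject}: step drops {step_drops(values)}, total {total}")
```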

At the same time, the 2024 monitoring conducted by the State Service of Education Quality of Ukraine found that students learning remotely did not lag behind their peers studying in person. A survey of sixth- and eighth-grade students showed that in Ukrainian language, the results of online learners did not differ from those of students attending in person, while in mathematics they were even slightly higher (Grade 6—5.61 vs. 6.87; Grade 8—6.29 vs. 6.93, respectively). However, students studying online have greater opportunities to copy answers, which may limit the validity of direct comparisons between the two formats.

Access to digital technologies is a key factor in reducing learning losses under remote instruction, enabling students to fully participate in lessons and complete assignments. Data from the Nationwide External Monitoring of the Quality of Primary Education (2021) show that students facing technical barriers (no device or a poor-quality one, a weak internet connection, limited digital skills) scored 5–8 points lower in mathematics and reading than their peers without such barriers (UCEQA, 2021). Because technical barriers may stem from low parental income, we included the family’s financial situation as a control variable in the subsequent analysis.

Data from the Ukrainian Center for Educational Quality Assessment (UCEQA) based on PISA-2022 results in Ukraine (UCEQA, 2024a, 2024b) also confirm the importance of access to digital devices and the internet for academic performance. In Ukraine, about 60% of students reported that their schools had been closed for more than three months during the COVID-19 pandemic—significantly more than in most reference countries, that is, countries comparable to Ukraine in socio-economic and cultural characteristics such as Bulgaria, Georgia, Estonia, Moldova, Poland, and Slovakia.

Access to devices proved critical: students who relied solely on a smartphone scored 32 points lower in mathematics (~0.32 standard deviations, SD) than those studying with their own laptop or computer. Device ownership also made a substantial difference: students who had to share a device with family members scored 72 points lower in mathematics (~0.72 SD), comparable to students using school-provided devices (–74 points, ~0.74 SD) and those with no device at all (–82 points, ~0.82 SD).
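The SD equivalents follow from the PISA reporting scale, which was originally set to a mean of 500 and a standard deviation of 100 points across OECD countries. The sketch below assumes exactly 100 points per SD; an individual country’s score spread can differ, so these conversions are approximations. It also assumes, for illustration, that all four gaps are measured against students with their own device.

```python
# Assumption: 1 SD = 100 PISA score points (OECD reporting-scale convention).
PISA_SD_POINTS = 100.0

# Mathematics gaps reported for PISA-2022 in Ukraine; the common reference
# group (students with their own device) is an assumption for this sketch.
gaps_points = {
    "smartphone only": -32,
    "shared family device": -72,
    "school-provided device": -74,
    "no device": -82,
}

def points_to_sd(points, sd=PISA_SD_POINTS):
    """Convert a score-point gap into standard-deviation units."""
    return points / sd

for group, points in gaps_points.items():
    print(f"{group}: {points} points ~ {points_to_sd(points):.2f} SD")
```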

In the UCEQA studies mentioned above, principals at more than half of Ukrainian schools reported that the lack of devices and internet was the greatest obstacle to organizing remote learning, the highest share among the comparison countries. Ukraine also showed one of the widest gaps between socio-economic groups in students’ confidence in their ability to learn independently. Students from large cities reported a much better experience of remote learning than those from smaller towns and villages, pointing to inequalities by place of residence.

Other international studies also confirm this effect. In PIRLS, the assessment of reading literacy among fourth graders, the decline during COVID was greater for children without a computer at home (Kennedy & Strietholt, 2023). A panel study in India recorded smaller losses where families had resources for home or online learning (Guariso & Nyqvist, 2023). In systems with shorter school closures and stronger digital readiness, such as Switzerland, Denmark, and Australia, average losses were minimal (Tomasik et al., 2020; Miller et al., 2024; Gore et al., 2021; Engzell et al., 2021). By contrast, in São Paulo, Brazil, losses were much larger in poorer schools and among students with less prior online learning experience (Lichand et al., 2022). Overall, access to devices and stable internet, together with well-structured digital organization of instruction, substantially mitigates the impact of remote education on learning outcomes.

Hypotheses

Despite a number of individual studies in Ukraine, there is still a lack of research that systematically examines how the interplay of learning format, access to digital devices, and quality of internet connection affects the academic outcomes of secondary school students. Most existing work focuses on the COVID-19 pandemic period, while contemporary studies on remote and blended learning under the conditions of full-scale war are nearly absent. This study takes a step toward addressing that gap.

In our previous publication, we described which platforms and messengers are most commonly used by 13–16-year-old students studying remotely, as well as the situation regarding access to devices and the internet. In this article, we examine whether digital access influences the effectiveness of remote and blended learning and, if so, in what ways. We test the following hypotheses:

- Students in blended formats achieve better educational outcomes than those studying fully online, but weaker outcomes than those studying fully in person.

Research indicates that even partial returns to in-person learning or flexible hybrid models substantially reduce learning losses, as documented in the United States and Brazil (Halloran et al., 2021; Lichand et al., 2022). Accordingly, students in blended formats would be expected to achieve higher outcomes than those studying exclusively online.

- Students with their own digital devices (tablet, laptop, or computer) achieve higher outcomes than those without such access.

Access to digital devices and stable internet is another crucial factor that can substantially mitigate learning losses. For example, the widespread availability of high-speed internet in the Netherlands helped keep average losses considerably lower compared to countries with weaker coverage (Engzell et al., 2020; Singh et al., 2022).

- Students who use learning platforms in combination with messengers achieve better outcomes than those who rely on messengers alone.

Research suggests that prior preparation of school systems for online learning also has a positive impact, reducing learning losses. For instance, municipalities in Brazil that had already been using digital platforms before the pandemic achieved stronger results (Lichand et al., 2022).

Data and methodology

This article is based on data from a survey conducted in March 2025 as part of the study “Future Index: Professional Expectations and Development of Adolescents in Ukraine.” The survey used a mixed CATI-CAWI design (computer-assisted telephone and web interviewing): randomly selected mobile numbers were first called to interview adults (18+) with children of the target age. After eligibility was confirmed and parental consent obtained, the child was invited to complete an online questionnaire.

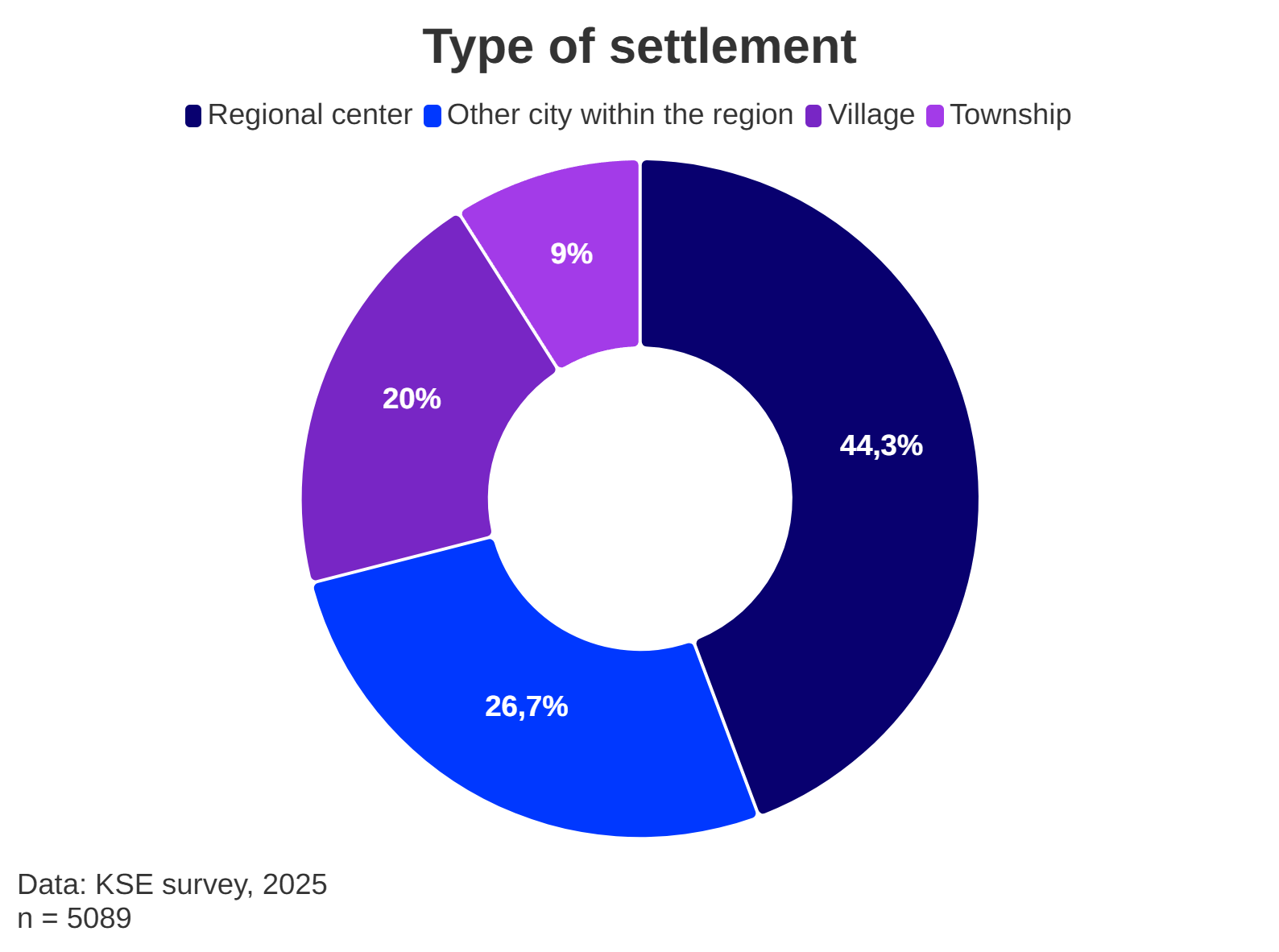

The study sample consisted of 5,089 students aged 13–16 from across Ukraine, excluding the occupied territories. In addition, parents or other legal guardians were surveyed, and their responses were used to assess the family’s socio-economic status. Among the respondents, 2,351 students were studying in online or blended formats. Further details on the sample are provided in Appendices 1.1, 1.2, and 1.3.

Compared with official data from the Ministry of Education and Science of Ukraine (MES)[1], the sample contains a noticeably smaller share of students studying in person (53.8% versus 69.2%). Otherwise, the sample reflects the structure of the overall student population and is highly representative by geographic indicators:

- Distribution by region: the maximum deviation from MES data was +1.8 percentage points (overrepresentation of the Center) and –1.4 percentage points (underrepresentation of the West);

- Distribution by type of settlement: the deviation did not exceed 1 percentage point, with a slight shift toward cities.

The dependent variable is educational outcomes, measured as the average grades in Ukrainian language, mathematics, physics, chemistry, biology, English, and computer science. These subjects were selected because they are compulsory in all secondary schools. Ukrainian language represents the humanities track; mathematics and the natural sciences (physics, chemistry, and biology) represent the science and mathematics track; English is the principal foreign language; and computer science reflects students’ level of digital skills.
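The dependent variable is a plain mean over the seven subjects. The sketch below assumes grades on Ukraine’s 12-point scale and that a subject a student left blank is skipped rather than imputed. The article does not state how missing subjects were handled, so that rule, like the function name `average_grade`, is hypothetical.

```python
from statistics import mean

SUBJECTS = ("ukrainian", "mathematics", "physics", "chemistry",
            "biology", "english", "computer_science")

def average_grade(grades):
    """Mean of self-reported semester grades (1-12 scale) over the seven subjects.

    Assumption: unreported subjects are skipped; returns None if none were reported.
    """
    reported = [grades[s] for s in SUBJECTS if grades.get(s) is not None]
    return mean(reported) if reported else None

print(average_grade({"ukrainian": 9, "mathematics": 8, "english": 11}))
```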

Students’ responses to the question, “What grades did you receive last semester in the following subjects?” constitute our dependent variable. We evaluated the effects of learning format, access to devices and the internet, and the use of online platforms and messengers, while controlling for type of educational institution, place of residence, and household income.

It should be noted that school grades are not uniformly criterion-referenced and often reflect the internal standards of a particular school. In practice, they are largely “norm-referenced”: a grade depends on the level of expectations within a given class or school and indicates more the student’s relative standing among peers than an exact measure of knowledge. Consequently, identical grades in different institutions may correspond to very different levels of preparation. In addition, the grades are based on students’ self-reports, which may introduce inaccuracies due to exaggeration or recall errors.

The format of learning was determined based on responses to the survey question: “Please indicate the format of instruction at your school.” Respondents were offered three options:

- Instruction takes place in the usual mode (in person);

- Instruction takes place remotely (online);

- Instruction takes place in a blended format.

Access to digital devices was operationalized through the question: “Which devices do you use for distance learning? Select all that apply.” The response options were: Computer, Laptop, Tablet, Smartphone, Other, None of the above. For each selected device, respondents were asked to indicate ownership: Mine, Belongs to a parent, Belongs to a sibling, Family-owned (shared), Other.

On this basis, a variable with four categories was constructed:

- No devices;

- Phone only;

- Shared tablet/PC/laptop;

- Own tablet/PC/laptop.
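The article does not spell out how students who selected several devices were assigned to a single category. One plausible rule, used in the hypothetical sketch below, is to take the best device a student has: an own tablet/PC/laptop outranks a shared one, which outranks a phone. Treating every owner other than “Mine” as shared and ignoring “Other” devices are assumptions, not the authors’ documented coding.

```python
# Hypothetical reconstruction of the four-category device variable.
LEARNING_DEVICES = {"Computer", "Laptop", "Tablet"}

def device_category(devices):
    """Map {device: owner} answers to one of four categories.

    devices: e.g. {"Laptop": "Mine", "Smartphone": "Family-owned (shared)"}.
    Assumptions: the best available device wins; only "Mine" counts as own;
    "Other" devices are not counted; "None of the above" is an empty dict.
    """
    owners = [owner for device, owner in devices.items()
              if device in LEARNING_DEVICES]
    if any(owner == "Mine" for owner in owners):
        return "Own tablet/PC/laptop"
    if owners:
        return "Shared tablet/PC/laptop"
    if "Smartphone" in devices:
        return "Phone only"
    return "No devices"

print(device_category({"Tablet": "Belongs to a sibling", "Smartphone": "Mine"}))
```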

Internet stability was operationalized through the question: “Do you have stable internet access at home for online lessons?”

Respondents were offered five answer options:

- Yes, the internet works well;

- Yes, but there are occasional problems not related to power outages;

- Yes, there are problems, but these are related to power outages;

- No, the internet often does not work;

- No, there is no internet at home.

For analysis, these responses were recoded into a dichotomous indicator: stable (response 1) versus unstable/absent (responses 2–5, reference category).
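The recoding is a threshold on the answer code. A minimal sketch, assuming the five options are stored as codes 1 to 5 in the order listed above (the function name `stable_internet` is hypothetical):

```python
INTERNET_OPTIONS = {
    1: "Yes, the internet works well",
    2: "Yes, but there are occasional problems not related to power outages",
    3: "Yes, there are problems, but these are related to power outages",
    4: "No, the internet often does not work",
    5: "No, there is no internet at home",
}

def stable_internet(response_code):
    """1 = stable (option 1); 0 = unstable/absent (options 2-5, reference category)."""
    if response_code not in INTERNET_OPTIONS:
        raise ValueError(f"unexpected response code: {response_code}")
    return 1 if response_code == 1 else 0

print([stable_internet(code) for code in INTERNET_OPTIONS])
```

This coding places outage-related disruptions (option 3) in the same group as having no connection at all, so the indicator captures any departure from a fully working connection rather than the cause of the problem.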

Use of online platforms was measured through the question: “Which digital platform do you use most often for distance learning? Select all that apply.” The options included: Viber, Telegram, Google Classroom, Moodle, All-Ukrainian Online School (AUOS), and Other (unspecified). Separate options for Google Meet and Zoom were not provided; responses mentioning these services may have been classified as Other. This should be taken into account when interpreting the findings on platform use.

Based on combinations of responses, a variable with three categories was constructed:

- Messaging applications only (Viber, Telegram) (reference category);

- Educational platforms only (Google Classroom, Moodle, AUOS);

- Both messaging applications and educational platforms.
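The three categories can be derived from the multi-select answers with two set intersections. In the sketch below, students who chose only “Other” cannot be placed in any category and receive `None`; how the authors treated such cases is not stated, so this handling and the function name are assumptions.

```python
MESSENGERS = {"Viber", "Telegram"}
PLATFORMS = {"Google Classroom", "Moodle", "All-Ukrainian Online School"}

def platform_category(selected):
    """Classify a student's selected tools into the three-category variable.

    Assumption: "Other" is ignored, so an "Other"-only answer returns None.
    """
    chosen = set(selected)
    uses_messenger = bool(chosen & MESSENGERS)
    uses_platform = bool(chosen & PLATFORMS)
    if uses_messenger and uses_platform:
        return "Both messaging applications and educational platforms"
    if uses_platform:
        return "Educational platforms only"
    if uses_messenger:
        return "Messaging applications only"  # reference category
    return None

print(platform_category(["Viber", "Google Classroom"]))
```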

All models include basic socio-demographic characteristics: region, type of settlement (regional center, other city, village, or township), type of educational institution, the family’s financial situation as self-assessed by parents, and the student’s gender and age.
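Categorical controls such as these enter a linear regression as sets of 0/1 indicator variables, with one category omitted as the reference; each coefficient in Table 1 is then a difference from that reference group, other variables held fixed. Statistical packages usually do this automatically (for example, patsy formulas accept `C(x, Treatment(reference=...))`). The standard-library sketch below shows the same treatment coding; the function name `dummy_code` is hypothetical.

```python
def dummy_code(values, reference):
    """Treatment (dummy) coding: one 0/1 column per non-reference category.

    Returns (levels, rows); a row of all zeros denotes the reference category.
    """
    observed = set(values)
    if reference not in observed:
        raise ValueError(f"reference category {reference!r} not observed")
    levels = sorted(observed - {reference})
    rows = [[1 if v == level else 0 for level in levels] for v in values]
    return levels, rows

formats = ["remote", "blended", "in-person", "remote"]
levels, rows = dummy_code(formats, reference="remote")
print(levels)  # ['blended', 'in-person']
print(rows)    # [[0, 0], [1, 0], [0, 1], [0, 0]]
```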

The type of educational institution was determined from parents’ or legal guardians’ responses to the question: “Please state the type of school your child attends.” The options included: (1) military lyceum, (2) military-sports lyceum, (3) gymnasium, (4) lyceum, (5) lyceum with advanced military-physical training, (6) arts college, (7) arts lyceum, (8) educational-rehabilitation center, (9) science lyceum, (10) special school, (11) sports lyceum, (12) specialized school, (13) general secondary (regular) school, as well as “other” and “difficult to answer.” Because some groups were too small for analysis, the types of educational institutions were merged into the following categories: lyceum = 1, 2, 4, 5, 7, 9, 11; gymnasium = 3; special school = 6, 10, 12; general secondary school = 13. This classification reflects the system in place prior to the school reform. Currently, a lyceum refers to any school that provides instruction in grades 10–11(12).
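The merging rule above maps questionnaire codes to four groups. The sketch below encodes it as a lookup table; sending code 8 (educational-rehabilitation center), “other”, and “difficult to answer” to a residual “other” group is an assumption suggested by the “Other” row in Table 1, not a rule stated in the text.

```python
# Merged school-type categories, keyed by questionnaire code.
SCHOOL_TYPE_GROUPS = {
    "lyceum": {1, 2, 4, 5, 7, 9, 11},
    "gymnasium": {3},
    "special school": {6, 10, 12},
    "general secondary school": {13},
}

def school_group(code):
    """Return the merged school-type category for a questionnaire answer.

    Assumption: code 8, "other" and "difficult to answer" fall into "other".
    """
    for group, codes in SCHOOL_TYPE_GROUPS.items():
        if code in codes:
            return group
    return "other"

print(school_group(9), "|", school_group(8))
```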

In addition, family circumstances were taken into account: parents’ level of education (grouped for analysis into three categories: “general secondary,” “vocational/professional,” and “higher”), whether the student’s family changed their place of residence after the full-scale invasion, and whether any family members serve in the Armed Forces of Ukraine.

Information on grades reported by students may be inaccurate due to social desirability bias toward higher marks or simple forgetfulness, which should be considered a limitation of the study. However, since all students answered the same questionnaire, this bias should not substantially affect the differences between groups. Other limitations may include varying grading practices across schools and students’ tolerance of cheating. We tested whether our data contained signs of grade misreporting. Specifically, we used the Kolmogorov–Smirnov test to compare grade distributions across learning formats and examined whether the share of students with the highest marks (a grade of 12, and grades of 10 or above) differed by format. We found no systematic deviations suggesting that grades were easier to obtain in online learning.
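Both checks can be illustrated in a few lines. The sketch below computes the two-sample Kolmogorov–Smirnov statistic D (the largest gap between the two empirical cumulative distributions) and the shares of top marks, using made-up grades rather than survey data. For p-values, a library routine such as `scipy.stats.ks_2samp` would normally be used; with a discrete 1–12 scale and many ties, standard KS p-values tend to be conservative.

```python
from bisect import bisect_right

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic D = max |F_a(x) - F_b(x)|.

    Empirical CDFs are step functions, so the maximum is attained at one
    of the pooled observed values; checking those values is sufficient.
    """
    a, b = sorted(a), sorted(b)
    d = 0.0
    for x in set(a) | set(b):
        f_a = bisect_right(a, x) / len(a)
        f_b = bisect_right(b, x) / len(b)
        d = max(d, abs(f_a - f_b))
    return d

def top_mark_shares(grades):
    """Share of students with a 12 and with a grade of 10 or above."""
    n = len(grades)
    return {
        "12": sum(g == 12 for g in grades) / n,
        ">=10": sum(g >= 10 for g in grades) / n,
    }

# Hypothetical grades for two learning formats (not survey data).
in_person = [12, 11, 10, 9, 8, 8, 7, 10]
online = [12, 10, 10, 9, 9, 8, 7, 6]
print(ks_statistic(in_person, online))
print(top_mark_shares(in_person), top_mark_shares(online))
```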

Further details of the methodology can be found in the first part of our article.

Findings

Students studying in person demonstrate the highest results, while the lowest results are observed among those in blended formats (Hypothesis 1 not confirmed).

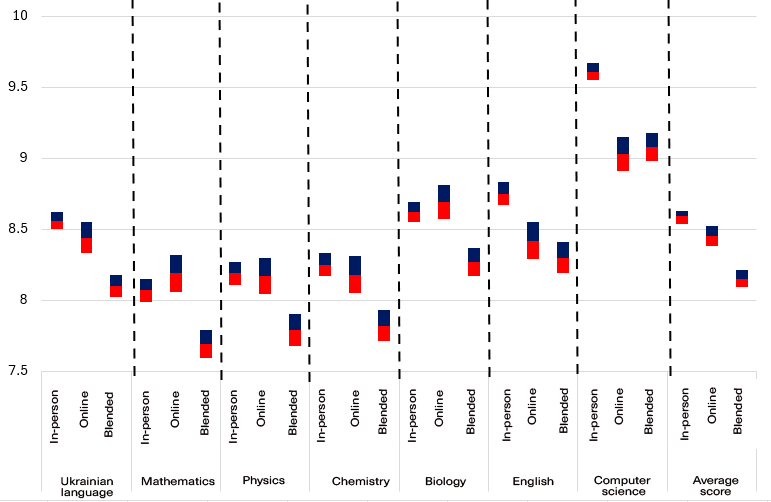

We begin with a basic overview of differences in academic performance by learning format (Figure 1). The differences in average grades across formats are small but statistically significant—the gap between in-person and blended learning amounts to 0.4 points.

Figure 1. Average student grades by learning format

The highest average grades are observed among students studying in person. However, they outperform online learners only in English (+0.3 points) and computer science (+0.6 points), while for most other subjects the differences are negligible (see Appendix 2.1).

By contrast, students in blended formats fall behind in-person learners by 0.3–0.6 points across all subjects, and behind online learners by 0.2–0.4 points in all subjects except English and computer science.

Regression analysis (Table 1, Model 1) shows that the blended learning format remains a consistent and statistically significant predictor of lower performance even when other variables are controlled for. Students in blended formats score on average 0.41 points lower than remote learners (the reference category, p < 0.001) and about 0.25 points lower than in-person learners (p < 0.001). The difference between remote and in-person formats is considerably smaller: in-person students score 0.16 points below remote learners, a difference significant only at the 5% level.
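Because remote learning is the reference category in Table 1, the blended vs. in-person gap does not appear as a coefficient; it is the difference between two coefficients. The sketch below shows that arithmetic with the Model 1 point estimates (the names `FORMAT_COEFS` and `contrast` are hypothetical). Only the point estimate can be recovered this way: the standard error of a difference b₁ − b₂ also requires the covariance of the two coefficients, Var(b₁ − b₂) = Var(b₁) + Var(b₂) − 2·Cov(b₁, b₂), which Table 1 does not report.

```python
# Model 1 format coefficients from Table 1 (reference category: remote).
FORMAT_COEFS = {"remote": 0.0, "in-person": -0.158, "blended": -0.408}

def contrast(a, b, coefs=FORMAT_COEFS):
    """Expected difference in average grade, format a minus format b,
    holding the other covariates fixed (point estimate only)."""
    return coefs[a] - coefs[b]

print(round(contrast("blended", "in-person"), 3))  # -0.25
print(round(contrast("in-person", "remote"), 3))   # -0.158
```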

When analyzing scores by individual subjects, the remote format emerges as a positive predictor of performance in Ukrainian language, mathematics, physics, and biology, and as non-significant for chemistry, English, and computer science (see Appendix 3.1). Overall, the selected factors exert a similar influence on grades across all subjects except English, where settlement type also plays a role: students from villages and townships perform worse in foreign language.

One possible explanation for differences in students’ grades is the influence of perceived safety. In regions where the security situation permits, most students attend school in person, while schools operating in remote or blended formats are primarily located closer to the front line. Among those studying in blended formats, many live in genuinely dangerous areas yet continue to attend classes.

Contrary to this assumption, a direct comparison of students’ subjective sense of safety paints a different picture (Figure 2): students attending school in person feel the safest, while those studying online feel the least safe. The subjective sense of safety therefore does not explain the lower academic performance of students in blended formats; the blended-format gap may instead reflect objective factors, namely the region where students live and attend school.

Figure 2. Students’ sense of safety by learning format

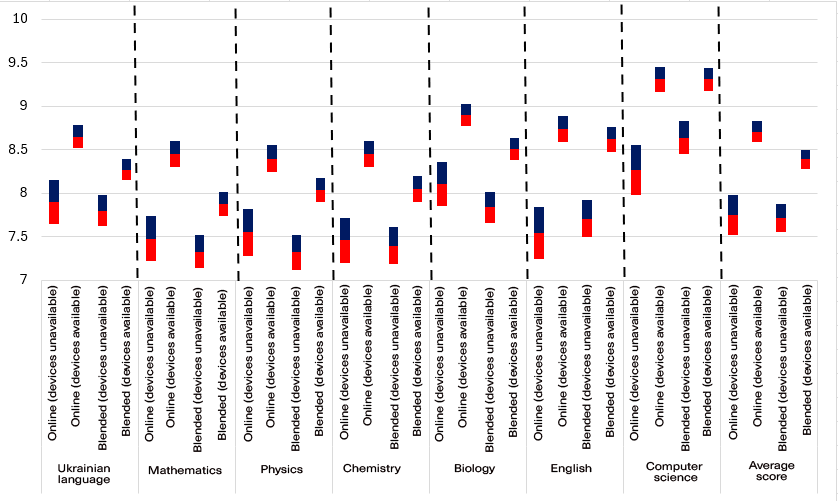

Students who use digital devices achieve higher scores than those who do not. Among students with access to devices, those studying online perform better than their equally equipped peers in blended formats (Hypothesis 2 not refuted).

The difference in academic outcomes between students in blended and online formats can be explained in part by lower access to digital devices. As noted in our previous publication, 65% of students in blended formats have access to such devices, compared to 71.6% of students studying online.

Figure 3. Average student grades by learning format and access to a computer or laptop

Indeed, when access to digital devices is taken into account, the picture changes considerably: online students with devices perform even better than their in-person peers in mathematics and the natural sciences (biology, physics, and chemistry; see Figure 3). Paradoxically, the only subject in which in-person students still outperform device-equipped online students is computer science.

At the same time, students in blended formats continue to fall behind even when they have devices. They perform worse than online students with devices in all subjects except English and computer science. Among students without devices, the difference between formats nearly disappears: online and blended learners show small subject-specific variations in grades, but their average score is the same.

Regression analysis (see Table 1, Model 2), limited to students in remote and blended formats, shows that access to digital devices is the strongest predictor of academic performance. Compared with students who have only a smartphone, those with a family computer or tablet score on average 0.58 points higher and those with their own 0.77 points higher, whereas the absence of any device is associated with a strongly negative effect (about –1.07 points, p < 0.05). By contrast, internet stability has no statistically significant effect.

Even after controlling for device access and internet stability, the blended format is associated with grades 0.33 points lower than remote learning. The combination of online learning and in-school attendance may create additional challenges that are not offset by technical resources. Overall, Model 2 explains about 19% of the variation in performance (adjusted R²), compared with 16% for Model 1, which points to the importance of digital access; because Model 2 is estimated only on students in remote and blended formats, however, the two figures are not strictly comparable.

The lag of students in blended formats is observed across all subjects except computer science, where the difference is not statistically significant (see Appendix 3.2). At the same time, performance in computer science is most strongly affected by access to digital devices (up to 2.56 points).

Similar results were obtained in the study by Kleinke & Cross (2022a, 2022b) on a sample of primary school students in the United States: students in blended formats consistently performed worse than those in either remote or in-person formats, even when their prior achievement levels were the same before the introduction of different modes of learning. The authors attribute this to environmental factors (schedule stability, consistency of teaching approaches, support at home) and developmental factors (self-regulation, discipline, and time management). In hybrid formats, these advantages are undermined by frequent changes in conditions, intermittent contact with teachers, and difficulties in coordinating home and school learning.

Comparison with primary school has its limitations due to the different psychological and developmental characteristics of students. A similar pattern is nevertheless observed in Ukraine among lower secondary students. According to a study by the State Service of Education Quality of Ukraine (2024), 6th- and 8th-grade students who studied in person or remotely achieved slightly higher results in Ukrainian language (median 6.45) than those in blended formats (median 6.35).

These findings partly align with the results of Lipien et al. (2023), who observed that students enrolled in a state virtual school and studying fully online even before the pandemic continued to make progress during COVID-19. The school examined in their study was a large-scale public online platform that combined synchronous and asynchronous instruction, a structured curriculum, personalized learning trajectories, interactive support from certified teachers, and tools for continuous progress monitoring. This illustrates the potential advantages of a stable, purpose-designed online model. However, this case is only partially comparable with most students in remote formats, given the effects of self-selection, the different level of support, and the absence of direct comparison with in-person peers.

Thus, although online and in-person formats are usually contrasted, the blended format is not simply a middle ground between safety and educational quality. It carries its own risks for learning outcomes, particularly when organization is unstable.

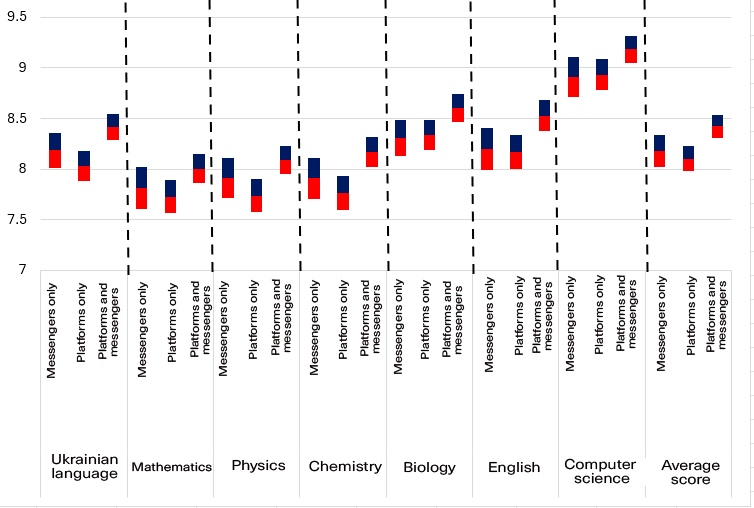

The use of platforms is not linked to students’ learning outcomes (Hypothesis 3 not confirmed).

In addition to students’ access to digital devices and the internet, the organization of remote learning through digital tools (platforms and messengers) may also be an important factor. It is therefore useful to examine differences in students’ grades depending on whether they use only platforms, only messengers, or both types of applications to organize their remote learning (see Figure 4).

Figure 4. Average student grades by use of learning platforms

Students who use both platforms and messengers for learning achieve slightly higher scores. However, the difference is statistically significant relative to both other groups only in English (see Appendix 2.3). For the average grade, as well as for grades in Ukrainian, physics, and chemistry, their 95% confidence intervals lie above those of students who use only platforms but overlap with those of students who use only messengers. In other subjects, the differences are not statistically significant.

Thus, students who use both platforms and messaging applications indeed perform slightly better, but the greater concern appears to be using platforms alone rather than relying solely on messengers.

Regression analysis (see Table 1, Model 2), which assessed the impact of using online platforms or messengers while controlling for place of residence, type of institution, and other factors, shows that neither students who use only educational platforms nor those who combine platforms with messengers perform significantly differently from those who rely solely on messengers. In other words, the differences observed at the descriptive level disappear once contextual characteristics are taken into account.

This suggests that, among the factors considered in our analysis, access to devices and the learning format are most strongly associated with outcomes. By contrast, neither internet stability nor the choice of communication tool (platform or messenger) showed a significant effect.

Table 1. Regression models of the impact of learning format, digital access, and platform use on educational outcomes (dependent variable: student average grade, calculated as the mean of grades in Ukrainian language, mathematics, physics, chemistry, biology, English, and computer science)

| Variable | Model 1 (learning format) | Model 2 (learning format, device access, and use of online platforms) |
|---|---|---|
| Learning conditions | | |
| Format (reference category: remote) | | |
| In-person | -0.158* | – |
| Blended | -0.408*** | -0.328*** |
| Device access (reference category: phone only) | | |
| No devices ♱ | – | -1.069* |
| Family computer / tablet | – | 0.581*** |
| Own computer / tablet | – | 0.767*** |
| Communication with teacher (reference category: messengers only) | | |
| Platforms only | – | -0.133 |
| Platforms + messengers | – | 0.012 |
| Stable internet connection | – | 0.038 |
| Type of educational institution (reference category: general secondary school) | | |
| Gymnasium | 0.097 | 0.204 |
| Lyceum | 0.196*** | 0.247** |
| Specialized school | -0.204 | -0.091 |
| Other | -0.069 | 0.001 |
| Student characteristics | | |
| Gender: female | 0.604*** | 0.590*** |
| Age (years) | 0.035 | 0.017 |
| Place of residence and family situation | | |
| Family changed place of residence after the full-scale invasion | -0.052 | 0.061 |
| Family member in the Armed Forces of Ukraine (AFU) | -0.231*** | -0.306*** |
| Parental education (reference category: secondary only) | | |
| Vocational / specialized | 0.376*** | 0.337*** |
| Higher | 1.108*** | 0.992*** |
| Family financial status (reference category: very poor) | | |
| Poor | 0.112 | 0.174 |
| Average | 0.291** | 0.304* |
| Well-off | 0.445*** | 0.467** |
| Very well-off | 0.609** | 0.428 |
| Place of residence (reference category: regional center) | | |
| City (non-regional center) | -0.046 | -0.065 |
| Village | 0.104 | 0.052 |
| Township | -0.136 | -0.087 |
| Regions (reference category: Vinnytsia) | included | included |
| Observations | 4,822 | 1,893 |
| R² | 0.165 | 0.207 |
| Adjusted R² | 0.157 | 0.186 |
| Constant | 6.825*** | 5.588*** |

*p<0.05; ** p<0.01; *** p<0.001. Full results table available here

♱ Because the survey was completed online, these students’ families must have had at least some device. However, access was presumably too limited for any device to be classified as a learning device.

Among the control factors, girls score higher (by about 0.6 points), and students attending lyceums achieve grades about 0.2 points above those of general secondary school students. A stronger family financial standing is likewise associated with higher average scores. Family background also matters: parents’ higher education is associated with better student performance, whereas having a relative serving in the Armed Forces of Ukraine is linked to lower grades, possibly because of stress. Settlement type is not a significant factor, and only a few regions emerged as “special cases” across the different models.

Conclusions and discussion

Our study tested three hypotheses concerning the relationship between the characteristics of remote learning and students’ educational outcomes.

Learning format. The hypothesis that students in blended formats would perform better than those online but worse than those in person was not confirmed. Instead, the blended format proved to be the least effective, while the differences between online and in-person learning were generally small.

Access to digital devices. The hypothesis that having a personal or family computer/tablet is associated with higher performance was confirmed. Students with their own laptop, computer, or tablet achieve better results than those who rely only on family devices or a personal smartphone. The complete absence of devices is associated with the greatest learning losses, although this is rare (about 2% of our sample).

Combination of platforms and messaging applications. The hypothesis that combining platforms with messengers brings advantages was not confirmed. Once contextual factors are controlled for, the differences between groups disappear.

Our findings align with international studies showing weaker outcomes in blended learning formats (Kleinke & Cross, 2022a, 2022b) and underscoring the importance of digital infrastructure (Engzell et al., 2020; Kennedy & Strietholt, 2023). Evidence from the State Service of Education Quality (2024) and PISA-2022 likewise highlights both the weaknesses of the blended approach and the strong impact of device access in the Ukrainian context. Taken together, these findings suggest that digital inequality is one of the key factors behind differences in learning outcomes.

The reasons for lower outcomes among students in blended formats remain open to debate. This article considers the possibility that they result from the instability of the format. An alternative explanation is that in-person assessments within the blended model expose the shortcomings of remote learning more effectively and leave less room for cheating.

The practical significance of these findings lies in two main points. First, the lower outcomes of blended formats compared to online learning suggest that blended instruction is not simply an intermediate model between in-person and online learning but has its own specific characteristics that warrant further study. Second, reducing learning losses appears to depend primarily on providing students with devices and stable internet access, while the choice of platform plays only a secondary role.

Limitations of the study

Our study has several important limitations.

First, our study is correlational and relies on cross-sectional data. While we compared groups of students by learning format and controlled for other variables, we cannot assert a causal effect of format on performance. The findings should therefore be interpreted as associations that require further testing in research designs capable of identifying causality, such as panel or experimental studies.

Second, we used school grades as an indicator of students’ knowledge. In the Ukrainian context, grades may be “norm-referenced”: rather than being based on unified criteria, they often reflect the internal standards of a particular school. As a result, identical scores across institutions are not fully comparable. Similar challenges are observed elsewhere; for example, in the United States, only 43% of grades align with standardized tests, while nearly 60% are either inflated or deflated (Crescendo Education Group, 2024). In addition, in our study, grades were self-reported by students, which may introduce bias due to social desirability or recall errors.

Third, the survey sample has several important limitations. The study did not include adolescents living in temporarily occupied territories, in areas without access to communication, or abroad. During the telephone contact stage, only about 11,000 parents out of more than 480,000 dialed numbers agreed to participate, which may have led to an overrepresentation of more engaged and better-resourced families. Moreover, only half of the children whose parents consented actually completed the online survey, adding another layer of self-selection and increasing the risk of systematic bias.
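To make the scale of this two-stage self-selection concrete, the minimal Python sketch below chains the two stages using the rounded figures above. It assumes exactly 480,000 dialed numbers, 11,000 consenting parents, one child per consenting parent, and a completion share of 0.5. The function name `two_stage_response` is our own illustration, not part of the study's methodology. Because more than 480,000 numbers were dialed, the resulting rates should be read as rough upper bounds.

```python
def two_stage_response(dialed: int, consented: int, completion_share: float) -> dict:
    """Chain the response rates of a two-stage survey.

    Stage 1: parents reached by phone agree to participate.
    Stage 2: a share of their children complete the online questionnaire.
    Assumes (hypothetically) one child per consenting parent.
    """
    consent_rate = consented / dialed            # share of dialed numbers that consented
    completed = consented * completion_share     # children who finished the survey
    overall_rate = completed / dialed            # completed surveys per dialed number
    return {
        "consent_rate": consent_rate,
        "completed": completed,
        "overall_rate": overall_rate,
    }


# Rounded figures from the text: ~480,000 dialed, ~11,000 consenting, about half completing.
rates = two_stage_response(480_000, 11_000, 0.5)
print(f"Parental consent rate: {rates['consent_rate']:.1%}")
print(f"Completed surveys: {rates['completed']:,.0f}")
print(f"Overall response rate: {rates['overall_rate']:.1%}")
```

With these inputs, parental consent comes to about 2.3% of dialed numbers and completed child surveys to roughly 1.1%, i.e., around one completed questionnaire per hundred calls, which is why the risk of systematic bias is substantial.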

Fourth, student performance is influenced by many different factors, from motivation and classroom climate to family income and the quality of teaching staff. The purpose of our analysis, however, was not to provide an exhaustive explanation of all possible influences but to assess the association between performance and the specific variables defined in our hypotheses (learning format, access to digital devices, internet access, and use of platforms).

Fifth, in the section on platform use we did not distinguish synchronous video platforms that enable real-time interaction (such as Google Meet or Zoom) from other platforms. This may have led us to underestimate the specific role of synchronous tools in remote learning.

Finally, the impact of remote and blended learning extends beyond educational outcomes. These formats may also have important implications for students’ psychological well-being and social development, but these aspects were outside the scope of our study and require separate investigation.

The authors extend special thanks to Ilona Sologoub, Vasyl Tereshchenko, Danylo Karakai, and Myroslava Savisko for editing and commenting on the article at various stages.

Appendix 1. Summary characteristics of the student sample

Figure D1.1

Figure D1.2

Figure D1.3

Note. Data (tables) for Appendices 1 and 2 are available here

Appendix 2. Dependence of grades on learning organization parameters

Figure D2.1. 95% confidence intervals of students' grades, by learning format, across subjects

Figure D2.2. 95% confidence intervals of students' grades, by learning format and availability of digital devices, across subjects

Figure D2.3. 95% confidence intervals of students' grades, by platform use, across subjects

The average score is shown at the boundary between the blue and red sections of the interval.

Appendices 3.1–3.3 are available at the following links:

Appendix 3.1. Regression results by subject (Model 1)

Appendix 3.2. Regression results by subject (Model 2)

[1] The dataset on the number of students in schools and their mode of study as of January 1, 2025, was obtained from the Ministry of Education and Science of Ukraine (MES) upon request.

Photo: depositphotos.com/ua

Disclosure

The authors do not work for, consult to, own shares in or receive funding from any company or organization that would benefit from this article, and have no relevant affiliations.