*The Mitchells vs. the Machines*, *Love, Death & Robots*, the Diia Summit: in May, Ukrainians were bombarded with content about artificial intelligence and automated machines. President Volodymyr Zelenskiy himself said that “Digital transformation is the key to fight corruption. Computers do not have friends or kin. Computers do not take bribes even with bitcoins. Artificial intelligence knows a thousand languages but it does not understand what a deal or a favor means”.

What do Ukrainians think of AI? Do they agree that AI can replace judges or soldiers? And do Ukrainians have more faith in AI than in regular automated machines, that is, robots? Our team conducted a survey experiment in 2020 to investigate these questions.

What is the difference between robots and AI?

A common approach is to define robots as machines that interact with the physical world by executing certain tasks and do not necessarily require intelligence to do so (industrial machinery, medical robotic arms, etc.). Robots are usually programmed and can be autonomous or semi-autonomous. In contrast, AI does not require a physical manifestation: it is a branch of computer science concerned with algorithms that solve complex problems which usually require human-level intelligence.

People often confuse the two. Surveys show that people sometimes use these terms interchangeably: for instance, 25% of British respondents define AI as robots. Similarly, 35% of urban Ukrainian respondents associate AI with “robots and robotics”. The question we ask is whether Ukrainians believe that AI can play different social roles than robots.

How did we collect the data? We collected new polling data using the Gradus application, an online panel that recruits urban Ukrainians aged 18-60 who live in cities with a population of 50,000 or more. Hence, the trends discussed below may not generalize to older and/or rural citizens. Nevertheless, the sample varies enough in education, age and occupational status to include respondents whose experience with modern technologies is likely to differ. All respondents own smartphones (since Gradus is a smartphone application) and thus have at least basic experience with gadgets. Fieldwork ran from February 28 to March 1, 2020, and yielded 1,000 responses.

Experiment. We randomly assigned respondents to see either “robots” or “AI” in otherwise identical questions, to test whether individuals perceive these technologies differently across domains. Because we are more interested in the social than the personal dimensions of AI/robot-human interaction and co-existence, we asked respondents to evaluate whether AI/robots can hold decision-making positions in various domains (legal, economic, moral, etc.) or display traits traditionally labeled as ‘essentially human’ (being emotional or creative). Specifically, we asked whether they believe robots or AI can take on certain roles in society. Responses ranged from 1 = strongly disagree to 5 = strongly agree:

- Q1. Robots/AI can take human jobs

- Q2. Robots/AI can help humans at their workplace

- Q3. Robots/AI can have emotions

- Q4. Robots/AI can write music

- Q5. Robots/AI can cure people

- Q6. Robots/AI can write legislation

- Q7. Robots/AI can raise children

- Q8. Robots/AI can replace soldiers in the army

- Q9. Robots/AI can lead the army

- Q10. Robots/AI can work as judges in courts

- Q11. Robots/AI can work as priests

Results

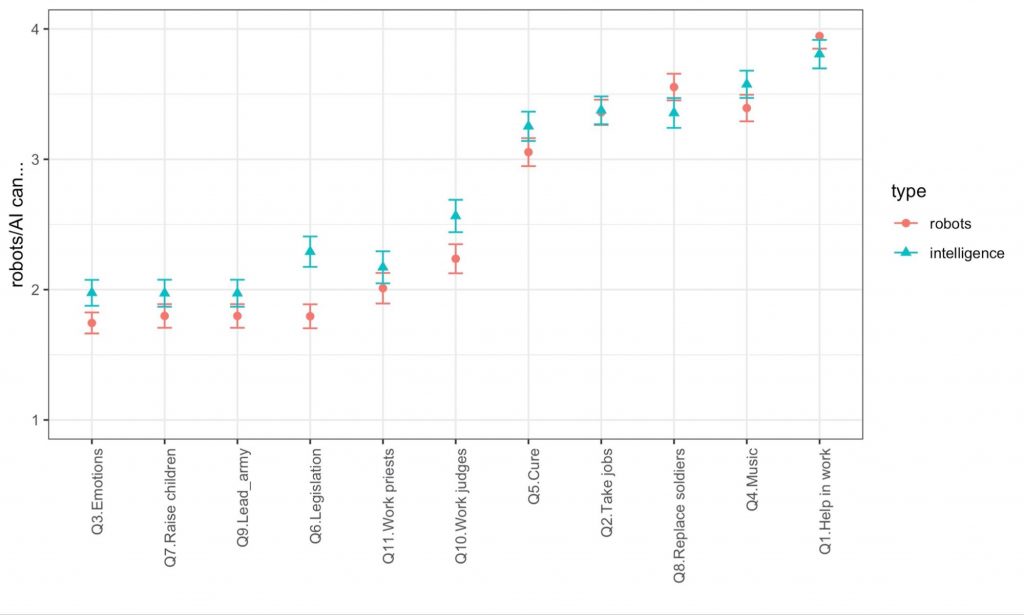

Figure 1 shows an interesting pattern of variation in how strongly respondents agreed that robots/AI could play certain roles in society. On most items, AI received slightly higher average ratings than robots (with some exceptions discussed below).

Figure 1. Average views towards robots/AI on the 5-point scale

Source: Gradus survey, own calculations. Higher values mean that respondents believe robots/AI are more capable of performing this function.
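Because each respondent saw only one wording, the robots/AI comparison in Figure 1 can be read as a difference in mean ratings between two randomly assigned groups. The sketch below illustrates that logic under stated assumptions: the function names (`assign_condition`, `item_means`, `ai_minus_robots`) and the toy ratings are hypothetical and are not the Gradus data, and the statistical models mentioned below are not reproduced here.

```python
import random
from statistics import mean

# Hypothetical sketch of a between-subjects survey experiment.
# The toy ratings below are invented for illustration, NOT the Gradus survey data.

def assign_condition(rng: random.Random) -> str:
    """Randomly assign a respondent to the 'robots' or 'AI' wording (50/50)."""
    return rng.choice(["robots", "AI"])

def item_means(responses, item):
    """Average 1-5 rating on `item`, computed separately for each condition."""
    by_condition = {}
    for r in responses:
        by_condition.setdefault(r["condition"], []).append(r[item])
    return {cond: mean(vals) for cond, vals in by_condition.items()}

def ai_minus_robots(responses, item):
    """Difference in means (AI minus robots); positive means AI was rated higher."""
    m = item_means(responses, item)
    return m["AI"] - m["robots"]

# Toy responses: each respondent rated items under one wording only.
toy = [
    {"condition": "robots", "Q5": 3, "Q10": 2},
    {"condition": "robots", "Q5": 4, "Q10": 1},
    {"condition": "AI", "Q5": 4, "Q10": 2},
    {"condition": "AI", "Q5": 5, "Q10": 2},
]

for item in ["Q5", "Q10"]:
    print(item, item_means(toy, item), ai_minus_robots(toy, item))
```

With random assignment, the two groups should differ only in the wording they saw, which is why a simple difference in means is a reasonable first look at the gap; models with controls such as age or income, like the ones the authors report, go further than this sketch.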

To summarize the findings:

- Most respondents disagree with the idea that robots/AI can feel emotions, raise children, lead armies, write legislation, or replace people as priests or judges. This is not surprising, given that emotions are typically considered uniquely human. The same goes for raising children, which in most human cultures is associated with significant emotional involvement, care, love, and empathy.

- It seems that Ukrainians are unwilling to make robots/AI responsible for serious moral decisions involving life and death. People do not want to delegate to robots/AI important decisions about how to govern societies, which wars to fight, and how to communicate with the spiritual realm. Moreover, army generals, judges, and priests are considered high-status individuals, so it is quite likely that people are reluctant to place robots/AI this high on the social ladder.

- In sharp contrast, respondents tend to agree that robots/AI can cure people, take their jobs, replace soldiers, write music, and help them with their work. In all these cases, robots/AI have already entered these realms of human activity. Moreover, these activities do not involve complicated moral decisions; instead, they rely on complex algorithms and textbook instructions.

- We also applied statistical models to the data and found that younger and wealthier respondents were more likely to believe that robots and AI can have human-like features and perform human tasks. Education, however, did not play a significant role. We also found that respondents do differentiate between robots and AI in almost all domains. The gap was very narrow for questions related to the job market; in other words, people believe that both robots and AI are likely to influence their workplaces.

- Surprisingly, we did not find that people see robots as better suited to routine tasks and AI as better suited to non-routine tasks. Instead, our survey experiment showed that respondents almost always rated AI higher than robots, regardless of the task or feature.

Conclusions

Contrary to what Zelenskiy believes, Ukrainians do not think that robots/AI can be responsible for serious moral decisions involving life and death, such as how to govern society or which wars to fight. Yet respondents rate robots/AI as more capable of serving as judges than of writing legislation, even though both items belong to the same (legal) realm. It could be that Ukrainians indeed (as Volodymyr Zelenskiy believes) would like to eliminate the human factor, namely corruption and nepotism, by delegating this part of the work to robots/AI. However, this remains a hypothesis, which we are investigating in our ongoing study of AI narratives in Ukraine.

Disclosure

The authors do not work for, consult for, own shares in or receive funding from any company or organization that would benefit from this article, and have no relevant affiliations.